Everything posted by DarrenWhite99

One thing that comes to mind is how the role works: it looks for volumes where protection is active. If you have suspended BitLocker for one or more reboots (manage-bde -protectors -disable C:), the role will NOT report that BitLocker is enabled, even though it is present and the volume is fully encrypted.
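The following minimal Python sketch shows why a fully encrypted volume can be missed. For illustration only, it assumes that the role's "protection is active" test corresponds to the `Protection Status` line of English `manage-bde -status` output. The sample text is abbreviated and illustrative, and the function names are hypothetical.

```python
import re

# Matches "Key:   Value" lines; assumes English manage-bde output for one volume.
_FIELD = re.compile(r"^\s*([^:]+?)\s*:\s*(.*?)\s*$")


def parse_manage_bde_status(text: str) -> dict:
    """Collect 'Key: Value' pairs from a single volume's status block."""
    fields = {}
    for line in text.splitlines():
        match = _FIELD.match(line)
        if match:
            fields[match.group(1)] = match.group(2)
    return fields


def is_fully_encrypted(fields: dict) -> bool:
    return fields.get("Conversion Status") == "Fully Encrypted"


def role_reports_bitlocker(fields: dict) -> bool:
    # Hypothetical model of the role: only volumes with active protection count.
    return fields.get("Protection Status") == "Protection On"


sample = """\
Volume C: [OSDisk]
    Conversion Status:    Fully Encrypted
    Percentage Encrypted: 100.0%
    Protection Status:    Protection Off
"""

fields = parse_manage_bde_status(sample)
print(is_fully_encrypted(fields), role_reports_bitlocker(fields))  # True False
```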


Domain Computers without Automate Agent

DarrenWhite99 commented on DarrenWhite99's file in Internal Monitors

Can you define "stopped working"? No results? Or does Build and View fail? If you don't have the latest version, you should re-download and install it, as I improved the speed. It looks for computers that have logged into the AD domain "recently". If your AD plugin has broken somehow and you aren't getting updated computer information, the monitor will not find any agents to report.
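The "logged in recently, but no agent" idea can be sketched in Python. This is not the monitor's SQL. The 30-day window, the input shapes and the function name are assumptions made for illustration. The sketch also shows why a broken AD plugin eventually yields no results: once last-logon data stops updating, nothing falls inside the window.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, Iterable, List


def computers_missing_agent(
    ad_last_logon: Dict[str, datetime],
    agent_names: Iterable[str],
    now: datetime,
    max_age_days: int = 30,  # hypothetical definition of "recently"
) -> List[str]:
    """Return AD computer names seen recently that have no matching agent."""
    cutoff = now - timedelta(days=max_age_days)
    # Compare case-insensitively, since Windows computer names are not case-sensitive.
    agents = {name.upper() for name in agent_names}
    return sorted(
        name
        for name, last_logon in ad_last_logon.items()
        if last_logon >= cutoff and name.upper() not in agents
    )
```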

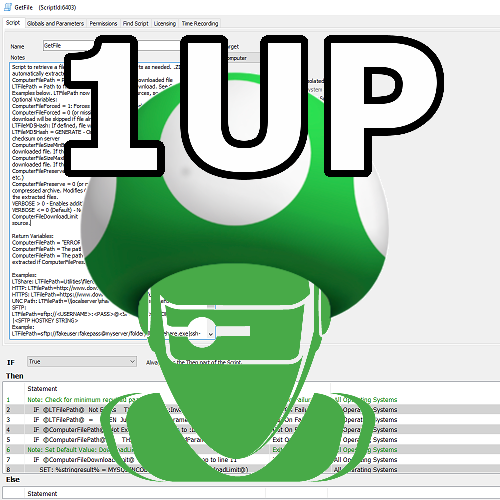

You shouldn’t run a script you can’t see, but there is no reason you shouldn’t be able to read the script. You import it the standard way by going to System -> Import -> XML File. Try importing twice (no, it shouldn’t matter) and restart Control Center. Then locate and open the script. If you still have trouble, maybe jump into the LabTechGeek Slack and ask in the Scripting channel.

I'm going with "No". Computers.version contains the "Service Pack x" string, so that is the right place to check for it. Try running the following query, with XXX being the correct computer ID: `SELECT version FROM computers WHERE computerid=XXX`. It might also help to run the script in the debugger to learn more about what is going on. (After that step executes, the internal variable %sqlresult% should hold the value returned by the query, so you can see whether the service pack is listed.)

Domain Computers without Automate Agent

DarrenWhite99 commented on DarrenWhite99's file in Internal Monitors

The 2.0 version is between 3 and 60 times faster (it dropped my queries from 6-15 minutes down to 15-30 seconds) and includes the OS limit. I think I may have accidentally uploaded the wrong file for 1.0.1, because when I downloaded it I didn't see the OS limit in there. Give the new version a try. It should be much faster, and it also excludes non-Windows computers.

Roles are automatically detected for agents. The roles will be assigned to agents once they update their config and resend system inventory (this should happen within a 24-hour period). Nothing specific is needed to enable the roles. Creating a Search and an AutoJoin group is a separate step, if you wish to do so. I just use the roles within scripts that are not necessarily specific to Dell. If I want a script to run only on Dell servers, I can check whether the "Dell Server" role is assigned and exit if it is not.

Roles are automatically detected for agents. The roles will be assigned to agents once they update their config and resend system inventory (this should happen within a 24-hour period). Nothing specific is needed to enable the roles. Creating a Search and an AutoJoin group is a separate step, if you wish to do so. I just use the roles within scripts that are not necessarily specific to HP. If I want a script to run only on HP servers, I can check whether the "HP Server" role is assigned and exit if it is not.

Version 1.0.2

42 downloads

The IP for a Hosted Automate Server is not guaranteed to remain fixed. However, if you do not include the IP in your Server Address template setting, your agents will not be able to communicate when DNS isn't working. See https://docs.connectwise.com/ConnectWise_Automate/ConnectWise_Automate_Knowledge_Base_Articles/Server%3A_Moving_to_a_New_FQDN for instructions on configuring alternate server addresses. (Official statement concerning Hosted Server IP addresses: https://docs.connectwise.com/ConnectWise_Automate/ConnectWise_Automate_Knowledge_Base_Articles/Cloud%3A_Cloud_Server_IP_Address)

This script is a CLIENT script, which can be scheduled to run once or a few times each day. It determines which probes are online and begins asking them to resolve the IP of your Automate server. It keeps asking agents until at least 5 agents have responded with the same IP. Once the IP has been determined, any templates that include an IP address but do not include the current IP are flagged as needing an update. Because this solution is distributed and works without any LTServer commands, it is suitable for both Hosted and On-Premise environments.

After importing the script, you must set the hostname and the email address for notifications inside the script. To schedule the script, go to Dashboard -> Management -> Scheduled Client Scripts and add the script with whatever schedule you like. Here is a suggested schedule:
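The consensus and template-flagging logic described above can be sketched in Python. This is not the client script itself. The function names, the `(agent_id, ip)` response shape and the sample server addresses are assumptions. Responses are consumed lazily, so no further agents are asked once 5 of them agree.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List, Optional, Tuple

IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def consensus_ip(
    responses: Iterable[Tuple[str, Optional[str]]], required: int = 5
) -> Optional[str]:
    """Return the first IP reported by `required` agents, or None.

    `responses` yields (agent_id, resolved_ip) as answers arrive. It is consumed
    lazily, so iteration (asking more agents) stops once consensus is reached.
    """
    counts: Counter = Counter()
    for _agent_id, ip in responses:
        if not ip:  # this agent could not resolve the server name
            continue
        counts[ip] += 1
        if counts[ip] >= required:
            return ip
    return None


def templates_needing_update(templates: Dict[str, str], current_ip: str) -> List[str]:
    """Flag templates whose server address contains an IP, but not the current one."""
    flagged = []
    for name, server_address in templates.items():
        ips = IPV4.findall(server_address)
        if ips and current_ip not in ips:
            flagged.append(name)
    return sorted(flagged)
```

Templates that list only a hostname are left alone, matching the rule that only templates which include an IP are checked.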

Active Directory Plugin Credential Status Monitor

DarrenWhite99 commented on DarrenWhite99's file in Internal Monitors

It isn't necessary to limit it, because the monitor automatically selects only eligible agents. By default, though, it will include agents that are not under a service plan. To prevent alerts on agents that are not onboarded, etc., I have mine targeted to the "Service Plans.Windows Servers.Server Roles.Windows Servers Core Services.Domain Controllers" group.

Version 1.0.0

150 downloads

This is the script that I developed to manage removal of our Managed AV. It supports Windows and OSX installations. If it does not detect the product on the agent, it skips the removal attempts. It uses some known package GUIDs and can accept a removal password. It will leverage the Agent Removal script included with the Trend Micro Plugin for Automate if you have it. If the primary removal steps fail, it retrieves an uninstaller tool that Trend Micro released a few years ago to clean the system (a copy is included in the bundle, since they seem to have taken it down). Finally, it tries some batch/VBS script steps that I made to disable or clean up the software manually. It supports a "ForcedRemoval" option that always triggers all the cleanup steps and skips testing whether the product is installed.
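The ordering described above can be sketched as a small Python control loop: detect the product, try the primary removal, fall back to the Trend Micro tool, then run the manual cleanup, with ForcedRemoval bypassing detection. This is a hypothetical reconstruction. It is not the script's code. The choice to stop once the product is no longer detected is an assumption, as are the step names.

```python
from typing import Callable, Iterable, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_removal(
    is_installed: Callable[[], bool],
    steps: Iterable[Step],
    forced: bool = False,
) -> List[str]:
    """Run removal steps in order and return the names of the steps that ran.

    Normal mode: do nothing if the product isn't detected, and stop as soon as
    a step leaves it undetected. Forced mode: never test detection and run
    every step.
    """
    ran: List[str] = []
    if not forced and not is_installed():
        return ran
    for name, step in steps:
        step()
        ran.append(name)
        if not forced and not is_installed():
            break
    return ran
```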

Version 1.0.1

1087 downloads

This script will trigger removal for the following applications (in this order):

- `ITSupport247*Gateway`
- `ITSupport247*MSMA`
- `ITSupport247*DPMA`
- `ITSupport247*`
- `ITSPlatform`
- `LogMeIn`

This just uses a generic PowerShell script I made that searches for applications by name and then runs their uninstall command. It has no specific knowledge of the applications. If the command uses msiexec, it makes sure that the action is uninstall (/x) and that it runs silently: it adds /quiet /norestart and removes any other /q* parameter. If the command is anything else, it just adds the "/s" parameter unless a parameter like "/s*" is already in the command.
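Below is a Python sketch of the name matching and command-line normalization described above. The original is PowerShell. These regular expressions approximate the stated rules. Only the `/i` install action is switched to `/x`, and `/norestart` is not duplicated if it is already present. Both of those details are assumptions, and the helper names are hypothetical.

```python
import fnmatch
import re
from typing import Iterable, List

_MSIEXEC = re.compile(r"msiexec", re.IGNORECASE)
_INSTALL_ACTION = re.compile(r"(?<!\S)/i(?=\{|\s|$)", re.IGNORECASE)  # /I{GUID} or /I GUID
_QUIET = re.compile(r"\s+/q\S*", re.IGNORECASE)  # /q, /qn, /qb, /quiet ...
_NORESTART = re.compile(r"(?<!\S)/norestart(?!\S)", re.IGNORECASE)
_SILENT = re.compile(r"(?<!\S)/s\S*", re.IGNORECASE)  # /s, /S, /silent ...


def normalize_uninstall(command: str) -> str:
    """Make an uninstall command line silent, following the rules described above."""
    cmd = command.strip()
    if _MSIEXEC.search(cmd):
        cmd = _INSTALL_ACTION.sub("/x", cmd)  # force the uninstall action
        cmd = _QUIET.sub("", cmd) + " /quiet"  # replace any /q* switch with /quiet
        if not _NORESTART.search(cmd):
            cmd += " /norestart"
        return cmd
    if not _SILENT.search(cmd):
        cmd += " /s"
    return cmd


def select_in_order(display_names: Iterable[str], patterns: Iterable[str]) -> List[str]:
    """Return installed names matching each wildcard pattern, in pattern order, without duplicates."""
    names = list(display_names)
    selected: List[str] = []
    for pattern in patterns:
        for name in names:
            if name not in selected and fnmatch.fnmatchcase(name.lower(), pattern.lower()):
                selected.append(name)
    return selected
```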

Version 1.0.1

159 downloads

The AD Plugin will not operate properly if credentials are missing or invalid. This monitor reports any Domain Controller Infrastructure Master that does not have valid credentials associated with it. The same information is available in the plugin, but this way you are notified automatically when there is a problem instead of having to check manually. The monitor is configured to match only agents with the "Domain Controller Infrastructure Master" role, so you can leave it targeted at all agents. If you only want to monitor systems that are under a service plan, you may choose to target a group such as "Service Plans.Windows Servers.Server Roles.Windows Servers Core Services.Domain Controllers". Thank you to @Jacobsa for the suggestion!

Version 1.0.0

23 downloads

This script takes the name of the file to capture as a parameter. The output is a PowerShell script capable of restoring the original file. The output is Base64-encoded and no more than 70 characters wide. The original filename and path are also stored as Base64-encoded Unicode, so any valid file (even one with international or emoji characters in its name) can be captured. The file timestamp is also captured and restored. This is meant as an alternative way to capture binary content so that it can be transmitted as plain text and re-created easily just by running a script. Capture and restore have been tested with PoSH2/Server2003 and Windows 10.

Example to capture: `powershell.exe -file captureportablefile.ps1 "c:\filetoretrieve.dat" > restorefile.ps1`

The contents can also be placed in the "Execute Script" function with a filename specified as a parameter (include quotes around the path if it has spaces). The output script saves the file with the original name\path, or it accepts a filename parameter for the new file to save to. The output can be used directly in "Execute Script" to re-create the file, for example on a different agent. The script reports whether restoring the file succeeded or failed.

Example to restore: `powershell.exe -file restorefile.ps1`

Example to restore to a different path: `powershell.exe -file restorefile.ps1 "c:\newfiletosave.dat"`

Here is an example of the script output.
Running the script below will create a file named "C:\Windows\Temp\Have a ☺ day.zip".

```powershell
$Base64FileName = @'
QwA6AFwAVwBpAG4AZABvAHcAcwBcAFQAZQBtAHAAXABIAGEAdgBlACAAYQAgADomIABkAGEAeQA
uAHoAaQBwAA==
'@
$TimeStamp=[DateTime]636604097389163155
$Base64Contents = @'
UEsDBAoAAAAAAP0Am0zn48F+HAAAABwAAAAZAAAASGF2ZSBhIGhhcHB5IGRheS1BTlNJLnR4dEp
1c3Qgc29tZSA/pD8/Pz8/Pz8gc3R1ZmYuDQpQSwMECgAAAAAA6QCbTORcBzs6AAAAOgAAABwAAA
BIYXZlIGEgaGFwcHkgZGF5LVVuaWNvZGUudHh0//5KAHUAcwB0ACAAcwBvAG0AZQAgADomPCZrJ
jsmQiZqJmAmYyZAJiAAcwB0AHUAZgBmAC4ADQAKAFBLAwQKAAAAAADxAJtMeDSP5jEAAAAxAAAA
GQAAAEhhdmUgYSBoYXBweSBkYXktVVRGOC50eHTvu79KdXN0IHNvbWUg4pi64pi84pmr4pi74pm
C4pmq4pmg4pmj4pmAIHN0dWZmLg0KUEsBAj8ACgAAAAAA/QCbTOfjwX4cAAAAHAAAABkAJAAAAA
AAAAAgAAAAAAAAAEhhdmUgYSBoYXBweSBkYXktQU5TSS50eHQKACAAAAAAAAEAGACFbKN39t3TA
UCsDnT23dMB8s/tc/bd0wFQSwECPwAKAAAAAADpAJtM5FwHOzoAAAA6AAAAHAAkAAAAAAAAACAA
AABTAAAASGF2ZSBhIGhhcHB5IGRheS1Vbmljb2RlLnR4dAoAIAAAAAAAAQAYANODVGD23dMB0T1
GLPbd0wHRPUYs9t3TAVBLAQI/AAoAAAAAAPEAm0x4NI/mMQAAADEAAAAZACQAAAAAAAAAIAAAAM
cAAABIYXZlIGEgaGFwcHkgZGF5LVVURjgudHh0CgAgAAAAAAABABgAYx1uafbd0wFjHW5p9t3TA
YN/S2n23dMBUEsFBgAAAAADAAMARAEAAC8BAAAAAA==
'@
# Decode the original path (Base64-encoded UTF-16LE "Unicode")
$FileName=[System.Convert]::FromBase64String($Base64FileName)
$FileName=[System.Text.Encoding]::Unicode.GetString($FileName)
# An optional first argument overrides the destination (wildcards are rejected)
If(($Args) -ne $Null) {
    If($Args[0] -match '^[^\*\?]+$') {$FileName=$Args[0]}
    Else {Write-Output 'Invalid Filename.';break}
}
Try {
    $FileContents = [System.Convert]::FromBase64String($Base64Contents)
    $Null=Mkdir $(Split-Path -Path $FileName) -EA 0
    # -ErrorAction Stop makes write failures reach the Catch block
    Set-Content -LiteralPath $FileName -Value $FileContents -Encoding Byte -ErrorAction Stop
    (Get-Item -LiteralPath $FileName -ErrorAction Stop).LastWriteTimeUtc=$TimeStamp
    Write-Output "File restore complete: $($FileName)"
} Catch {Write-Output "File restore failed: $($FileName)"}
```
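The same format can also be produced and consumed outside PowerShell: a Base64-encoded UTF-16LE path, Base64 contents wrapped at 70 characters, and a .NET ticks timestamp. This Python sketch is an assumption-based re-implementation, not the author's capture script, and the function names are hypothetical. It restores the timestamp as UTC, matching `LastWriteTimeUtc` above, and can decode the example filename shown there.

```python
import base64
import os
import textwrap
from datetime import datetime, timedelta, timezone

# .NET DateTime ticks are 100-nanosecond intervals since 0001-01-01 00:00:00.
_EPOCH = datetime(1, 1, 1, tzinfo=timezone.utc)


def to_ticks(dt: datetime) -> int:
    delta = dt - _EPOCH
    return (delta.days * 86400 + delta.seconds) * 10_000_000 + delta.microseconds * 10


def from_ticks(ticks: int) -> datetime:
    # Python datetimes have microsecond resolution, so sub-microsecond ticks are dropped.
    return _EPOCH + timedelta(microseconds=ticks // 10)


def wrap_b64(data: bytes, width: int = 70) -> str:
    """Base64-encode bytes and wrap the text to lines no wider than `width`."""
    return "\n".join(textwrap.wrap(base64.b64encode(data).decode("ascii"), width))


def unwrap_b64(block: str) -> bytes:
    """Decode a wrapped Base64 block, ignoring line breaks and other whitespace."""
    return base64.b64decode("".join(block.split()))


def capture(path: str):
    """Return (filename_block, ticks, contents_block) for a file."""
    name_block = wrap_b64(os.path.abspath(path).encode("utf-16-le"))
    mtime = datetime.fromtimestamp(os.path.getmtime(path), tz=timezone.utc)
    with open(path, "rb") as f:
        contents_block = wrap_b64(f.read())
    return name_block, to_ticks(mtime), contents_block


def restore(name_block: str, ticks: int, contents_block: str, dest: str = None) -> str:
    """Re-create the file (optionally at `dest`) and restore its modification time."""
    target = dest or unwrap_b64(name_block).decode("utf-16-le")
    parent = os.path.dirname(target)
    if parent:
        os.makedirs(parent, exist_ok=True)
    with open(target, "wb") as f:
        f.write(unwrap_b64(contents_block))
    mtime = from_ticks(ticks).timestamp()
    os.utime(target, (mtime, mtime))
    return target
```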

Version 1.0.1

359 downloads

This Dataview incorporates some data from the Disk Inventory Dataview and the Computer Chassis Dataview, including Video Card, Memory, and CPU columns. I created it when a client asked for help gathering this information. The first time, we exported from multiple Dataviews and merged the columns in Excel. The next time they asked, I made this so that one Dataview had all the columns that were important in this instance. Perhaps you would like these columns in a single Dataview too. The SQL is safe to import through Control Center->Tools->Import SQL. The Dataview should be added to the Inventory folder.

Version 1.0.0

87 downloads

UPDATE - 20190411 - The stock "PROC - Bad Processes Detected" monitor has been improved. I don't know exactly when, but on an up-to-date system running 2019 Patch3, its results match this monitor's results. It now actually matches the EXE name (not just the process name). I'll leave this post up in case someone wants this for an older system. If your system is current, however, this monitor will not improve the results, and I suggest staying with the stock monitor.

I got tired of the useless or wrong BAD PROCESS tickets created by the stock "PROC - Bad Processes Detected" monitor, such as the "Reg.hta" classification alerting when Reg.exe is found running. This SQL clones the stock monitor (or updates itself if you run it again), targeting the same computer groups and using the same alert settings. (If you have removed the stock monitor, it will still create the new one, but it will not target any specific groups.) This monitor has been adjusted to match only when the process executable matches the Bad Process executable name. In a perfect world it would match based on the entire path, but the process classification table only holds the EXE name. Even so, this should be much more accurate and much less noisy, and when you re-classify a process, you are doing so for the EXE name, not just the process title. This SQL only creates or updates the new monitor, so don't forget to disable the alerts assigned to the stock monitor, or you will get tickets from both of them.
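Here is a minimal Python sketch of the difference between the two matching styles. For illustration only, it assumes that the old behavior was equivalent to comparing names with the extension stripped. The stock monitor's actual SQL may differ, and the function names are hypothetical.

```python
import ntpath
from typing import Iterable


def _stem(name: str) -> str:
    """File name without directory or extension, lowercased (Windows path rules)."""
    return ntpath.splitext(ntpath.basename(name))[0].lower()


def matches_by_stem(process_path: str, bad_names: Iterable[str]) -> bool:
    """Hypothetical model of the noisy behavior: extensions are ignored."""
    return _stem(process_path) in {_stem(n) for n in bad_names}


def matches_by_exe(process_path: str, bad_names: Iterable[str]) -> bool:
    """Match only when the executable file name (including extension) matches."""
    exe = ntpath.basename(process_path).lower()
    return exe in {ntpath.basename(n).lower() for n in bad_names}
```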

Version 1.0.0

29 downloads

The attached SQL modifies all Dataviews that include a ticketid so that they also include the external ticket ID as "Ticket External ID". I suggest copying one or a few of the stock Dataviews first, and then running this. Although it updates all Dataviews with ticket information, many of them will be flagged as changed the next time Solution Center is run and may be reset to the original. The copied Dataviews should keep the additional column. Save the file and import it into Control Center using Tools -> Import -> SQL File. It will ask if you want to "Import 1 Statement into the database". If it says there are many statements, choose "No". That means you have an older (buggy) Control Center, and you will need to import using SQLyog or some other tool.

Keywords: remote monitor free drive drives disk disks volume volumes mounted mount point no letter space percent percentage

The v_extradatacomputers reference is actually to a table, not a view. It is refreshed about twice an hour but is only rebuilt daily or perhaps weekly, so new EDFs may take some time to appear there. To rebuild the table (drop and rebuild the complete structure with all current computer EDFs as columns), run `call v_ExtraData(1,'computers');`. To refresh the table (update the values for existing columns in the table), run `call v_ExtraDataRefresh(1,'computers');`. However, I have posted an update that no longer uses this table. It avoids the Build and View error and works immediately on live values, and it also calls v_ExtraData for you to make sure the table is built correctly. The script should be the same, so just download the new file and import the .SQL. It will update the existing monitor for you automatically!

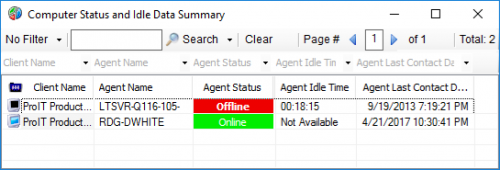

Agent Status and User Idle Time Dataview

DarrenWhite99 commented on DarrenWhite99's file in SQL Snippets

That makes me think that the file was saved in the wrong format. I re-downloaded the .ZIP and opened the file in Notepad, and when I selected "Save As" the type was UTF8. I switched it to ANSI and saved a copy. The file was 1 byte shorter. I didn't try comparing again, I just went ahead and re-uploaded. Try downloading it again, or just open your copy in Notepad and save as "ANSI". Note: Any agent that has already used this monitor will already have a copy of it, which must be deleted before it will be downloaded again. I have another solution that helps with this, comparing the agent copies of files in the Transfer\Monitors folder against the versions on the server and deleting any that are found to be a different size. This keeps remote agents current without you having to do a bunch of manual work anytime you change a remote monitor's file. See https://www.labtechgeek.com/topic/3243-solution-to-keep-exe-remote-monitors-up-to-date-with-the-server/
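The size-comparison cleanup described above can be sketched in Python. The directory layout, the `server_sizes` mapping and the function names are hypothetical, and this is not the linked solution. A UTF-8 byte order mark check is included because saving a file in a different encoding can change its size (a UTF-8 BOM alone is 3 bytes). A size change is exactly what the comparison detects.

```python
import codecs
import os
from typing import Dict, List


def has_utf8_bom(path: str) -> bool:
    """True if the file starts with the 3-byte UTF-8 byte order mark."""
    with open(path, "rb") as f:
        return f.read(3) == codecs.BOM_UTF8


def prune_stale_copies(agent_dir: str, server_sizes: Dict[str, int]) -> List[str]:
    """Delete agent copies whose size differs from the server's copy.

    `server_sizes` maps file name -> size in bytes on the server. Files the
    server doesn't list are left alone. Returns the names that were deleted.
    """
    removed = []
    for name in sorted(os.listdir(agent_dir)):
        path = os.path.join(agent_dir, name)
        if not os.path.isfile(path) or name not in server_sizes:
            continue
        if os.path.getsize(path) != server_sizes[name]:
            os.remove(path)  # deleted so that a fresh copy can be downloaded again
            removed.append(name)
    return removed
```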

Version 1.0.1

422 downloads

This Dataview is basically the same as the "Computer Status" Dataview, but I have added a column for Agent Idle Time. I find it helpful when I need to see quickly which users are on their systems and which machines are unused or have long idle times, so that I can work without disrupting an active user. I have also added another column, `Agent Additional Users`, which shows any other logins reported by Automate, such as on a Terminal Server. Although it differs by only a couple of columns, I have found it to be a very useful Dataview and refer to it often. To import, just extract the .sql file from the zip. In Control Center, select Tools->Import->SQL File. It will ask if you want to import 3 statements, which is normal. When finished, select Tools->Reload Cache.

Version 1.0.2

520 downloads

This script tests, and if necessary creates or resets, the Cache/Location Drive user account credentials and the Location Admin credentials. If the credentials are domain-based (domain\username, username), they are tested but not reset. If the cache user is defined, cannot be validated, and is a local account (.\username), it is created if missing and its password is reset. After the cache user credentials are tested, the Location Admin is tested. If it cannot be validated and it is a local account, it is created if missing, its password is reset, and it is added to the local Administrators group. Whenever a password is reset, it is set not to expire. This script relies on the Location's "Login for Administrator Access" setting under the "Deployment and Defaults" tab being configured correctly to obtain the Admin credentials. The Cache User credentials are specified by the Location Drive settings on the Location's General tab.
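The decision rules above can be summarized in a small Python sketch. The step descriptions and function names are hypothetical. The sketch only decides what would be done and does not touch any accounts.

```python
from typing import List, NamedTuple


class Account(NamedTuple):
    kind: str  # "local" or "domain"
    name: str


def parse_account(login: str) -> Account:
    """'.\\user' is a local account; anything else (DOMAIN\\user, user) is treated as domain."""
    if login.startswith(".\\"):
        return Account("local", login[2:])
    return Account("domain", login)


def remediation_steps(login: str, credentials_valid: bool, is_location_admin: bool) -> List[str]:
    """Describe what would be done for one credential, following the rules above."""
    account = parse_account(login)
    if credentials_valid or account.kind != "local":
        return []  # valid, or domain-based: tested only, never reset
    steps = [
        f"create local user {account.name} if missing",
        "reset password",
        "set password to never expire",
    ]
    if is_location_admin:
        steps.append("add to local Administrators group")
    return steps
```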

Version 1.0.0

174 downloads

You can't run .ps1 files directly in remote monitors the way you can .vbs and .bat files. This applies beyond remote monitors, too: .ps1 doesn't run universally like .vbs or .bat. One way to make PowerShell portable is to use a .vbs or .bat file to carry the PowerShell script. Here are a couple of ways you can do this.

The first is a generic way to embed text files inside a batch script. The embedded files are extracted and saved separately from the script. This example includes two simple text files. (See BATCH-WITH-EMBEDDED-FILEs.bat)

The second method includes PowerShell code directly inside a batch file. This can run anywhere a batch file can, and the PowerShell is interpreted directly without creating any secondary files. There is a trade-off: the output will not report the correct line number for any failures, and a script crash can result in no useful output. (Output is buffered. Write-Host output appears immediately, while Write-Output is buffered until the script completes.) This makes PowerShell wrapped in a batch file harder to develop and debug. The method works best when you develop and test the PowerShell script as a separate file and then simply append its contents to the end of the batch framework script. (See BATCH-WITH-EMBEDDED-POWERSHELL.bat)

I use method 1 when I need to include standalone files and want to move them all as one file. (One example is a batch script that imports a trusted publisher certificate. The certificate is carried inside the batch, but it needs to be its own file so that certutil can import it.) I use method 2 any time I want a batch file to run PowerShell. Several of my remote monitors in LabTech are .bat-wrapped PowerShell scripts. I don't like creating a batch file that only turns around and creates a separate PowerShell file, because then you have two files to clean up, etc. Aside from the starter scripts, I included examples of how I have used both methods in real life.
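The idea behind method 1, where one carrier file holds several payload files, can be sketched in Python. The `::BEGIN name` / `::END` markers are hypothetical and are not necessarily the convention used in BATCH-WITH-EMBEDDED-FILEs.bat. In a real batch carrier, the payload would sit after an `exit /b` line so that cmd never tries to execute it, and cmd skips lines starting with `::` as label-style comments.

```python
import os
import re
from typing import List

# Hypothetical section markers; the actual batch files may use a different convention.
_BEGIN = re.compile(r"^::BEGIN\s+(\S+)\s*$")
_END = "::END"


def extract_embedded(script_text: str, out_dir: str) -> List[str]:
    """Write each '::BEGIN name' ... '::END' section of a script to its own file."""
    written: List[str] = []
    current, lines = None, []
    for line in script_text.splitlines():
        if current is None:
            match = _BEGIN.match(line)
            if match:
                current, lines = match.group(1), []
        elif line.strip() == _END:
            # basename() drops any directory part of the embedded name
            path = os.path.join(out_dir, os.path.basename(current))
            with open(path, "w", encoding="utf-8", newline="") as f:
                f.write("\n".join(lines) + "\n")
            written.append(path)
            current = None
        else:
            lines.append(line)
    return written
```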